Data Visualizations of Star Trek Dialogue

The goal of this project was to explore Star Trek dialogue. I wished to see what words were most commonly spoken by Picard, my favorite character in the series. Creating a word cloud was the most direct way to visualize this data and I chose to use the Python word_cloud library.

One possible business application of this type of analysis is aggregating review data and comparing the words used in 5-star reviews with those used in 1-star reviews. This information could show what is working and what is not working with a product.
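
As a rough illustration of the review idea, the sketch below counts words in two tiny, made-up sets of reviews and lists the words that appear in one group but never in the other. The review texts and the `word_counts` and `distinctive_words` helpers are hypothetical and are not part of this project.

```python
from collections import Counter
import re

def word_counts(texts):
    """Count lowercase alphabetic words across a list of strings."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z]+", text.lower()))
    return counts

def distinctive_words(group_a, group_b):
    """Return words used in group_a but never in group_b, most frequent first."""
    a, b = word_counts(group_a), word_counts(group_b)
    return [word for word, _ in a.most_common() if word not in b]

# Hypothetical review snippets, for illustration only.
five_star = ["Great battery and great screen", "Battery lasts all day"]
one_star = ["Screen cracked in a week", "Arrived broken and the screen flickers"]

print(distinctive_words(five_star, one_star))
print(distinctive_words(one_star, five_star))
```

A real comparison would need far more reviews and a stop word list, much like the one used for the word clouds below.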

Visualizations

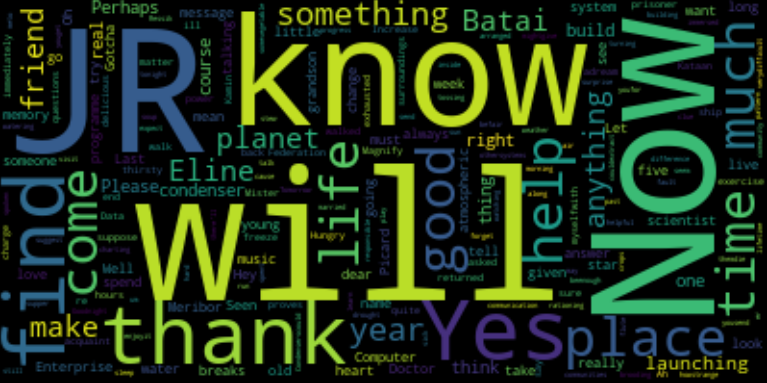

Picard's Words

This is the word cloud for Picard in one of my favorite episodes. Can you guess the episode?

Hint: Look at the proper nouns listed. The data has not been cleaned!

Answer: "The Inner Light" Season 5 Episode 25. Episode 123 in the data. Episode 125 overall.

I removed most character rank and name references because they dominated the word cloud: characters often address one another by name or rank. Removing these words draws out aspects of TNG dialogue other than who talks to whom the most.

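from nltk.corpus import stopwords

# Start from NLTK's English stop words, then add ranks, names, and common filler words from the show.
stop_words = stopwords.words('english')
new_stopwords = ["one", "number", "will", "mister", "know", "captain", "well", "want", "data", "worf", "us", "see", "q", "la forge", "picard", "la", "forge"]
stop_words.extend(new_stopwords)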
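
One detail of the list above is worth a quick check. If the dialogue is split into single-word tokens before filtering, as the filtering loop under Code Examples appears to do, a two-word entry such as "la forge" can never equal a token, so the separate "la" and "forge" entries do the actual work. The sketch below shows this with a shortened stop list and `str.split()` standing in for the tokenizer; both are assumptions made for illustration.

```python
# Shortened, illustrative stop list; the project's full list is longer.
stop_words = ["captain", "la forge", "la", "forge"]

# str.split() stands in for the real tokenizer here (an assumption for this sketch).
tokens = "Mister La Forge report to the Captain".split()

# Same comparison as the project's filter: lowercase each token, then look it up.
kept = [t for t in tokens if t.lower() not in stop_words]
print(kept)

# Entries containing a space can never equal a single-word token.
unmatchable = [s for s in stop_words if " " in s]
print(unmatchable)
```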

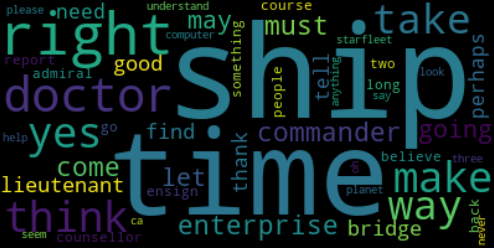

Picard word cloud for all seasons

Picard is the captain and is most concerned about his "ship". He gives many commands that physically move the show from one location to another: "make it so", "on the way", or "set course". He is concerned about what is "right" and "good" and is constantly considering the best course of action.

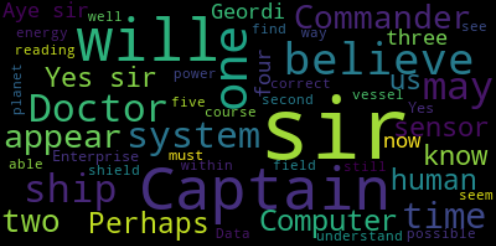

Data word cloud for all seasons

Data uses many words that fit his character's ambitions: "computer", "appear", "human", "time", "know", "believe". Data theorizes and proves how computers like him may be considered sentient and sapient, and explains how machines may evolve just like organic matter if given the right conditions. Data is an engineer, so he refers to the ship's functions in many ways: "sensor", "reading", "ship", "vessel", "shield". I left in ranks and names because they didn't dominate the word cloud.

Code Examples

import numpy as np

listpicardlines = []
episodes = alllines['TNG'].keys()

# Go through each episode and add Picard's dialogue to the list of Picard's lines.
for ep in episodes:
    # Episodes with no data are stored as NaN. np.nan is used because NumPy 2.0 removed the np.NaN alias.
    if alllines['TNG'][ep] is not np.nan:
        for member in alllines['TNG'][ep]['PICARD']:
            listpicardlines.append(member)

# Join all of the separate lines into one string for the word cloud library.
allpicardlines = " ".join(listpicardlines)

# Filter out stop words, then keep only purely alphabetic tokens.
# word_tokens is the tokenized dialogue; the step that creates it is not shown in this excerpt.
filter_test = []
for w in word_tokens:
    # Lowercase before comparing because every entry in stop_words is lowercase.
    if w.lower() not in stop_words:
        filter_test.append(w)

filter_nopunctuation = [word.lower() for word in filter_test if word.isalpha()]

How the word cloud was actually generated

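from wordcloud import WordCloud
import matplotlib.pyplot as plt

# finishedstuff holds the cleaned text as a single string; the step that builds it is not shown in this excerpt.
wordcloud = WordCloud(max_words=50).generate(finishedstuff)
plt.imshow(wordcloud, interpolation='bilinear')
plt.axis("off")
plt.show()

Considerations and Improvements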

I was expecting to see "stardate" or "log" because Picard so often starts an episode with those words.

The letters "ca" are listed as a word. Tracking down which step in the data collection or data analysis produced this was outside the scope of the project.
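
One possible source of "ca", which was not verified against this data, is the tokenizer. Treebank-style tokenizers such as NLTK's `word_tokenize` split "can't" into "ca" and "n't", and an `isalpha()` filter then keeps "ca" while dropping "n't". The sketch below uses a simple regular expression as a stand-in tokenizer that keeps contractions whole; `tokenize_keep_contractions` is a hypothetical helper, not code from this project.

```python
import re

# Runs of letters, optionally joined by apostrophes (can't, captain's).
WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)*")

def tokenize_keep_contractions(text):
    """Split text into words without breaking contractions apart."""
    return WORD_RE.findall(text)

tokens = tokenize_keep_contractions("I can't raise the shields, Captain.")
print(tokens)

# With contractions kept whole, the isalpha() filter drops "can't" entirely
# instead of leaving a stray "ca" behind.
alpha_only = [t.lower() for t in tokens if t.isalpha()]
print(alpha_only)
```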

Some of the data had words that were combined into one. For example "hadwords" instead of "had words". This was common enough to slightly alter the word cloud in some instances. This data could be separated into individual words but may require scraping the original scripts with newer tools or other methods that are out of the scope of this project.
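
Short of re-scraping, one alternative is to split fused tokens against a known vocabulary. The sketch below is a minimal dictionary-based splitter that prefers the split with the fewest pieces. The `split_fused` helper and the tiny `vocab` set are hypothetical; a real run would need a full English word list and would still make mistakes on ambiguous strings.

```python
def split_fused(word, vocab):
    """Split `word` into vocabulary words, or return None if no full split exists."""
    n = len(word)
    # best[i] holds the shortest list of vocab words that spells word[:i].
    best = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if best[start] is not None and piece in vocab:
                candidate = best[start] + [piece]
                if best[end] is None or len(candidate) < len(best[end]):
                    best[end] = candidate
    return best[n]

# Tiny illustrative vocabulary, for this sketch only.
vocab = {"had", "words", "word", "ha", "s", "make", "it", "so"}

print(split_fused("hadwords", vocab))
print(split_fused("makeitso", vocab))
print(split_fused("picard", vocab))
```

Tokens that cannot be split, such as names missing from the vocabulary, come back as `None` and can be left unchanged.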

The methodology could be much stricter and applied equally to every generated image. I slightly tailored each word cloud to present data that would easily be recognizable without massively altering the initial data set because this is a fun project for insight, not a scientific exploration.
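
A stricter version could route every character through one function with a single stop list and fixed settings. The sketch below is one hypothetical way to do that using only the standard library. `top_words`, `SHARED_STOP_WORDS`, and the sample lines are illustrative and are not the code behind the images above.

```python
from collections import Counter
import re

# One stop list and one word cap shared by every character (illustrative values).
SHARED_STOP_WORDS = frozenset({"the", "a", "to", "is", "it", "captain", "data", "picard"})
MAX_WORDS = 50  # mirrors max_words=50 in the WordCloud call

def top_words(lines, stop_words=SHARED_STOP_WORDS, max_words=MAX_WORDS):
    """Return (word, count) pairs after identical cleaning for every character."""
    words = re.findall(r"[a-z]+", " ".join(lines).lower())
    counts = Counter(w for w in words if w not in stop_words)
    return counts.most_common(max_words)

# Made-up sample lines, for illustration only.
sample_lines = {
    "PICARD": ["Make it so.", "Set a course to the ship.", "The ship is ready."],
    "DATA": ["The sensor reading is clear, Captain.", "Sensor contact."],
}

for character, lines in sample_lines.items():
    print(character, top_words(lines)[:3])
```

The resulting counts could then be converted to a dict and handed to the word_cloud library, for example through `WordCloud.generate_from_frequencies`, so that every image is built from data cleaned in exactly the same way.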